Using AI to scan the Earth

Each year, SpaceNews selects the people, programs and technologies that have most influenced the direction of the space industry in the past year. Started in 2017, our annual celebration recognizes outsized achievements in a business in which no ambition feels unattainable. This year’s winners of the 8th annual SpaceNews Icon Awards were announced and celebrated at a Dec. 2 ceremony hosted at the Johns Hopkins University Bloomberg Center in Washington, D.C. Congratulations to all of the winners and finalists.

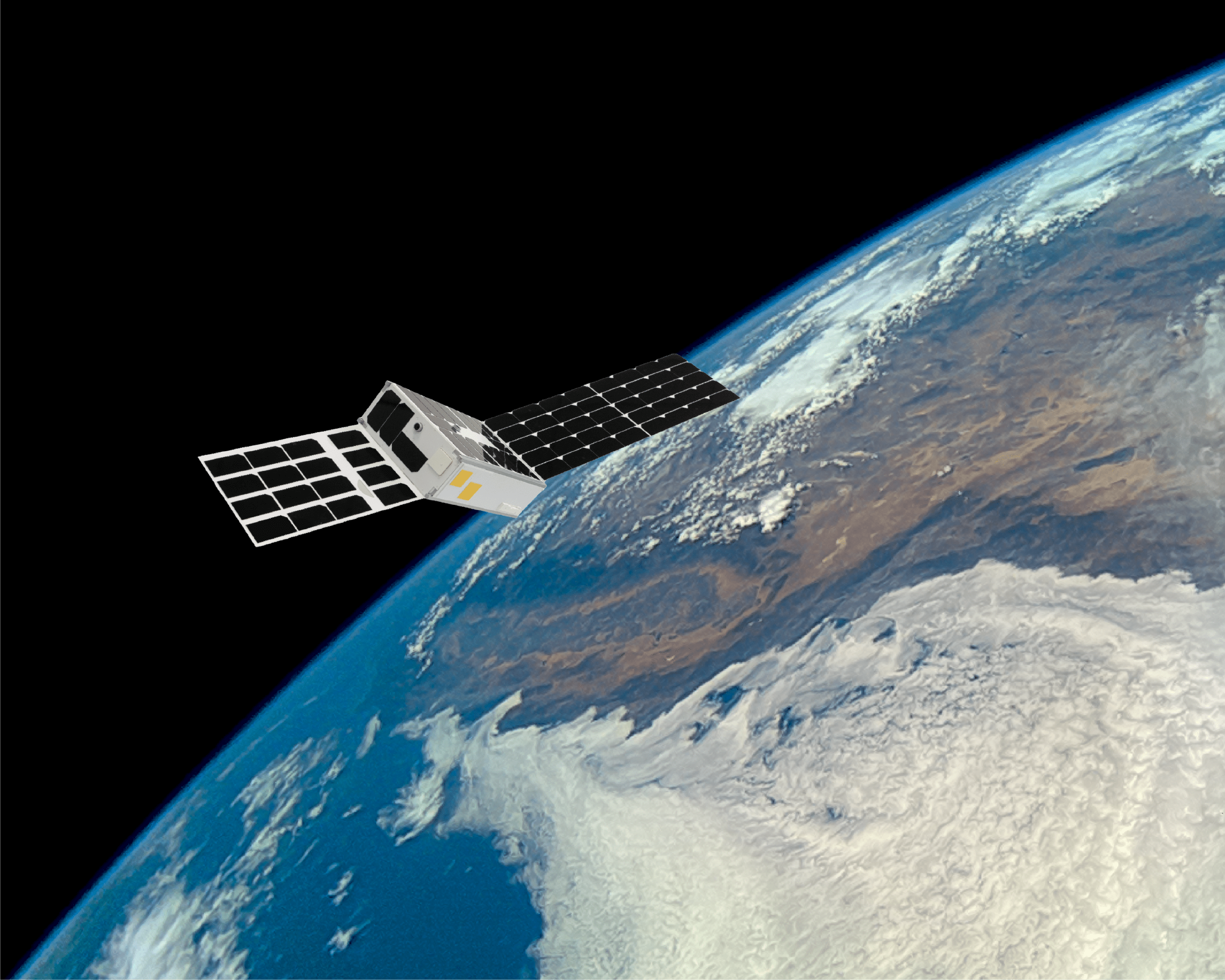

At Earth’s mid-latitudes, which are home to most economic activity, clouds cover two-thirds of the surface at any point in time. As a result, Earth observation satellites scanning the surface to gather imagery in wide swaths or pointing cameras at specific sites often produce pictures obscured by clouds.

Earlier this year, NASA's Jet Propulsion Laboratory (JPL) demonstrated a way to help solve that problem. A research experiment called Dynamic Targeting showed that a satellite could look ahead along its orbital path, analyze what it saw and decide where to point before gathering imagery.

“We believe that in the future every single space mission will operate this way,” Steve Chien, JPL principal investigator for Dynamic Targeting, said in a 2024 talk at Northeastern University’s Institute for Experiential AI.

Dynamic Targeting promises to make satellites more flexible and efficient, the project's leaders said. Satellite sensors paired with AI algorithms trained to spot thermal anomalies could task cameras on the same spacecraft or on others to zoom in for a closer look at a potential wildfire or volcanic eruption. Similarly, tailored algorithms could help satellites detect deep convective ice storms, which produce heavy precipitation, turbulence and lightning.
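The article does not describe how the onboard detectors work. As a rough illustration of how a detection could trigger follow-up tasking, the Python sketch below swaps in a simple contextual threshold (pixels much hotter than the scene median) for a trained AI detector. The function names, the 30 K margin and the request format are all hypothetical.

```python
from statistics import median
from typing import Dict, List, Tuple

Scene = List[List[float]]  # brightness temperatures in kelvin, one value per pixel


def hot_spots(brightness_temp_k: Scene, margin_k: float = 30.0) -> List[Tuple[int, int]]:
    """Return (row, col) of pixels hotter than the scene median by more than margin_k.

    A simple threshold used here as a stand-in for a trained anomaly detector
    (hypothetical; not the algorithm described in the article).
    """
    background = median([t for row in brightness_temp_k for t in row])
    return [
        (r, c)
        for r, row in enumerate(brightness_temp_k)
        for c, t in enumerate(row)
        if t - background > margin_k
    ]


def tasking_requests(brightness_temp_k: Scene, margin_k: float = 30.0) -> List[Dict[str, object]]:
    """Turn each flagged pixel into a follow-up observation request (hypothetical format)."""
    return [
        {"row": r, "col": c, "reason": "thermal_anomaly"}
        for r, c in hot_spots(brightness_temp_k, margin_k)
    ]
```

In a real system the requests would be handed to a scheduler for the same spacecraft's camera or to another satellite; that step is outside this sketch.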

“We’re looking for specific things we’re interested in,” Chien said in an interview. “We’re not just taking pictures of everything. That’s a big change.”

Researchers began assembling the building blocks for Dynamic Targeting more than a decade ago. But it took advances in artificial intelligence and space-based edge processing to make it work.

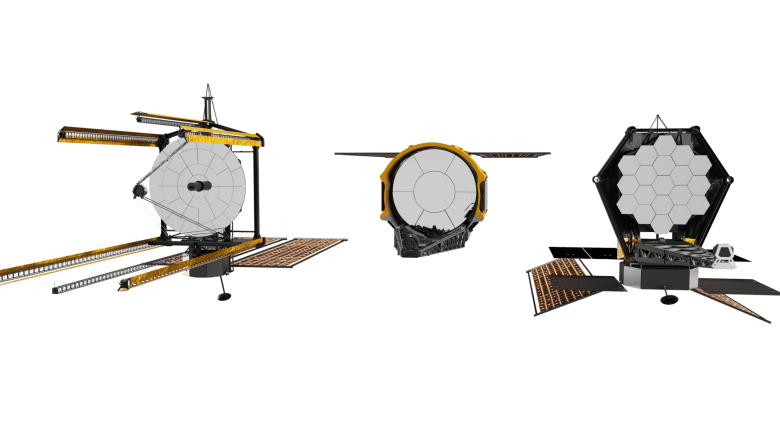

The demonstration came together when JPL began working with U.K. startup Open Cosmos and Irish startup Ubotica Technologies. In July, a hyperspectral sensor on Open Cosmos’ CogniSat-6 scanned the horizon before the Ubotica payload ran the JPL algorithm, which identified clouds and worked out how to point the sensor to gather cloud-free images.
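The pointing decision can be sketched as follows, assuming the look-ahead image has already been turned into a cloud mask by a classifier (the JPL cloud-detection algorithm itself is not shown). The sketch splits the mask into left, nadir and right cross-track strips and picks the clearest one. The strip layout, the column ordering (left to right relative to the direction of flight), the 20 percent cloud limit and all names are illustrative assumptions, not the flight software.

```python
from typing import List, Optional

# Rows run along-track, columns cross-track (left to right); True = cloudy pixel.
Mask = List[List[bool]]


def strip_cloud_fractions(mask: Mask, n_strips: int = 3) -> List[float]:
    """Cloud fraction in each of n_strips equal-width cross-track strips."""
    n_cols = len(mask[0])
    bounds = [round(i * n_cols / n_strips) for i in range(n_strips + 1)]
    fractions = []
    for lo, hi in zip(bounds, bounds[1:]):
        cells = [row[c] for row in mask for c in range(lo, hi)]
        fractions.append(sum(cells) / len(cells))
    return fractions


def choose_pointing(mask: Mask, max_cloud_fraction: float = 0.2) -> Optional[str]:
    """Pick the clearest strip, or None if every strip is too cloudy."""
    labels = ["left", "nadir", "right"]
    fractions = strip_cloud_fractions(mask, len(labels))
    best = min(range(len(labels)), key=lambda i: fractions[i])
    if fractions[best] > max_cloud_fraction:
        return None  # everything ahead is too cloudy; skip this acquisition
    return labels[best]
```

Returning `None` when every strip is too cloudy models the option of skipping an acquisition rather than collecting an obscured image.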

Time was of the essence because the satellite, at an altitude of 500 kilometers, was racing over the ground at a speed of 7.5 kilometers per second. To point the camera accurately, the Dynamic Targeting algorithm also considered Earth’s rotation and curvature.

“We have to interpret the imagery, extract the information, figure out how to change the instrument pointing and then collect the data in 50 to 90 seconds,” Chien said.
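A back-of-the-envelope check ties the 50-to-90-second window to the altitude and speed given above. Taking the article's 7.5 km/s ground speed and 500 km altitude, the sketch below computes how far ahead along the ground track the sensor must look, the off-nadir angle to that point on a spherical Earth compared with a flat-Earth approximation, and how far the surface moves east under Earth's rotation in the same interval. The spherical Earth of radius 6,371 km is a simplifying assumption, and the rotation term gives only the size of the eastward shift; how it projects onto the pointing depends on the orbit's inclination, which the article does not give.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean Earth radius (spherical-Earth assumption)
SIDEREAL_DAY_S = 86164.1   # one rotation of Earth relative to the stars


def look_ahead_distance_km(ground_speed_km_s: float, lead_time_s: float) -> float:
    """Ground-track distance covered during the lead time."""
    return ground_speed_km_s * lead_time_s


def off_nadir_angle_deg(altitude_km: float, ground_distance_km: float) -> float:
    """Off-nadir angle to a point ground_distance_km ahead, on a spherical Earth."""
    theta = ground_distance_km / EARTH_RADIUS_KM            # Earth-central angle (rad)
    orbit_radius = EARTH_RADIUS_KM + altitude_km
    horizontal = EARTH_RADIUS_KM * math.sin(theta)            # offset along track
    vertical = orbit_radius - EARTH_RADIUS_KM * math.cos(theta)  # offset toward Earth
    return math.degrees(math.atan2(horizontal, vertical))


def rotation_shift_km(latitude_deg: float, elapsed_s: float) -> float:
    """Eastward distance the surface moves under Earth's rotation."""
    surface_speed = (2 * math.pi * EARTH_RADIUS_KM
                     * math.cos(math.radians(latitude_deg)) / SIDEREAL_DAY_S)
    return surface_speed * elapsed_s


if __name__ == "__main__":
    altitude_km, speed_km_s = 500.0, 7.5  # figures quoted in the article
    for lead_s in (50.0, 90.0):
        d = look_ahead_distance_km(speed_km_s, lead_s)
        curved = off_nadir_angle_deg(altitude_km, d)
        flat = math.degrees(math.atan2(d, altitude_km))
        shift = rotation_shift_km(0.0, lead_s)
        print(f"{lead_s:.0f} s ahead: {d:.0f} km, {curved:.1f} deg off nadir "
              f"(flat Earth: {flat:.1f} deg), equatorial rotation shift {shift:.1f} km")
```

Curvature pulls the required angle below the flat-Earth value, by more the farther ahead the sensor looks, which illustrates why the algorithm had to account for Earth's curvature.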

The demonstration was further complicated by CogniSat-6 carrying only one sensor. JPL’s initial concept for Dynamic Targeting called for a satellite with a look-ahead sensor and a second sensor pointed down. Because CogniSat-6 had a single camera, it looked ahead for clouds before slewing to point down and to the left or right.

“It’s real satellite autonomy,” said Aubrey Dunne, Ubotica co-founder and chief technology officer. “It’s a satellite capturing data, making a decision on its own, without a human in the loop, and acting on that decision as well.”

This article first appeared in the December 2025 issue of SpaceNews Magazine.

Stay Informed With the Latest & Most Important News

Previous Post

Next Post

-

01Two Black Holes Observed Circling Each Other for the First Time

01Two Black Holes Observed Circling Each Other for the First Time -

02From Polymerization-Enabled Folding and Assembly to Chemical Evolution: Key Processes for Emergence of Functional Polymers in the Origin of Life

02From Polymerization-Enabled Folding and Assembly to Chemical Evolution: Key Processes for Emergence of Functional Polymers in the Origin of Life -

03Thermodynamic Constraints On The Citric Acid Cycle And Related Reactions In Ocean World Interiors

03Thermodynamic Constraints On The Citric Acid Cycle And Related Reactions In Ocean World Interiors -

04Φsat-2 begins science phase for AI Earth images

04Φsat-2 begins science phase for AI Earth images -

05Hurricane forecasters are losing 3 key satellites ahead of peak storm season − a meteorologist explains why it matters

05Hurricane forecasters are losing 3 key satellites ahead of peak storm season − a meteorologist explains why it matters -

06Binary star systems are complex astronomical objects − a new AI approach could pin down their properties quickly

06Binary star systems are complex astronomical objects − a new AI approach could pin down their properties quickly -

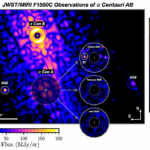

07Worlds Next Door: A Candidate Giant Planet Imaged in the Habitable Zone of α Cen A. I. Observations, Orbital and Physical Properties, and Exozodi Upper Limits

07Worlds Next Door: A Candidate Giant Planet Imaged in the Habitable Zone of α Cen A. I. Observations, Orbital and Physical Properties, and Exozodi Upper Limits