AI could deliver insights when paired with (the right) humans


ST. LOUIS – Artificial intelligence combined with human insight promises to transform geospatial intelligence, experts said at the GEOINT Symposium 2025.

But it will require standards, data verification and monitoring to ensure accurate results, they said.

“It is a competitiveness issue in national security,” said Abe Usher, the co-CEO of Black Cape. “Humans plus AI absolutely will replace humans without AI.”

Reinventing Geospatial CEO Stephan Gilotte added that true understanding or insights will be achieved when “the humans do what they’re good at and the machines do what they’re good at.”

With the latest AI tools, human-machine teaming may sound simple. It’s not.

People will need to collaborate to establish metrics, benchmarks, standards and best practices so that eventually, when humans and AI work together, “we’re not being misled by the AI, but actually generally supported,” said Nadine Alameh, Taylor Geospatial Institute executive director.

It’s also important to understand, verify and correctly label the data and imagery used to train AI models, experts said. Is it satellite imagery, for example, or synthetic data?

“We have to describe what type of imagery we are using,” said Taegyun Jeon, SI Analytics founder and CEO. “Authenticity of the data is very important.”

Another challenge is developing the “processes, procedures and tools to monitor incoming data and monitor models to make sure they’re performing in the way that you expect,” said Don Polaski, Booz Allen Hamilton vice president of AI. As AI architectures become increasingly complex, “where models are reasoning and coming up with their own workflows, we need to think about getting the instrumentation in place to collect logs.”

At GEOINT, Katrina Mulligan, OpenAI’s national security lead, demonstrated how ChatGPT analyzes geospatial images to identify their location. Logs running throughout the demonstration showed the clues the chatbot uncovered and how it searched the web to test its assumptions.

Neither AI tools nor human analysts tend to provide that level of transparency.

“For a long time, we as a community have relied on experts, but we didn’t necessarily ask them to show their work,” Usher said. “As we’re involved in teamwork with an AI, we can have it show its work, show its assumptions and cite the information that it’s using and the outputs that it’s presenting to us. This can open up a new form of transparency that we haven’t had as a community.”

On human-AI cooperation, Polaski warned that while some people are wary of AI, others are too trusting.

“I hear a lot about getting the existing workforce that didn’t grow up with these tools to adopt them,” Polaski said. “The bigger challenge is younger folks who have grown up with algorithms and AI and trust them implicitly. How do we make sure that the new folks know when to call the bluff of the AI?”

Stay Informed With the Latest & Most Important News

Previous Post

Next Post

-

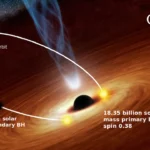

01Two Black Holes Observed Circling Each Other for the First Time

01Two Black Holes Observed Circling Each Other for the First Time -

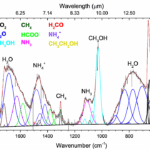

02From Polymerization-Enabled Folding and Assembly to Chemical Evolution: Key Processes for Emergence of Functional Polymers in the Origin of Life

02From Polymerization-Enabled Folding and Assembly to Chemical Evolution: Key Processes for Emergence of Functional Polymers in the Origin of Life -

03Φsat-2 begins science phase for AI Earth images

03Φsat-2 begins science phase for AI Earth images -

04Thermodynamic Constraints On The Citric Acid Cycle And Related Reactions In Ocean World Interiors

04Thermodynamic Constraints On The Citric Acid Cycle And Related Reactions In Ocean World Interiors -

05Hurricane forecasters are losing 3 key satellites ahead of peak storm season − a meteorologist explains why it matters

05Hurricane forecasters are losing 3 key satellites ahead of peak storm season − a meteorologist explains why it matters -

06Binary star systems are complex astronomical objects − a new AI approach could pin down their properties quickly

06Binary star systems are complex astronomical objects − a new AI approach could pin down their properties quickly -

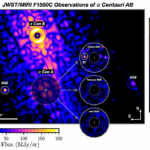

07Worlds Next Door: A Candidate Giant Planet Imaged in the Habitable Zone of α Cen A. I. Observations, Orbital and Physical Properties, and Exozodi Upper Limits

07Worlds Next Door: A Candidate Giant Planet Imaged in the Habitable Zone of α Cen A. I. Observations, Orbital and Physical Properties, and Exozodi Upper Limits