
How time series data is fueling the final frontier

When we think of space exploration, we picture towering rockets, satellites orbiting Earth and astronaut footprints on the moon. However, behind these grand achievements lies an unsung hero: time series data. Today, as physical AI (or AI that interacts with and learns from the physical world) plays a growing role in space missions, the ability to capture and manage that data at scale has become mission-critical.

Tracking change, powering flight

At its core, time series data is a record of how things change over time. In aerospace, that means temperatures, voltages, pressure, positioning and thousands of other readings streaming from sensors every second. These aren't just numbers; they are the foundation of every mission.

Time series powers everything from trajectory tracking and fuel monitoring to successful docking operations. It's what allows ground teams to understand what's happening onboard a spacecraft that's hundreds of thousands of miles away. And it's increasingly what allows low Earth orbit (LEO) satellites to operate without manual intervention.

Think of time series capture and processing like the resolution of a photograph or video. Low resolution, i.e. fewer megapixels or frames per second, produces a fuzzy, less accurate view of reality. Sophisticated, highly deterministic systems require the equivalent of high-resolution imaging: dense, high-fidelity data collection to drive precise and reliable physical AI models.
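
To make the resolution analogy concrete, here is a minimal Python sketch under invented assumptions: a hypothetical thermal sensor with a slow drift and a 5-second overheating spike, sampled once per second and once per minute. The signal shape, spike and sampling rates are illustrative only and are not drawn from any real mission.

```python
import math


def sensor_reading(t: float) -> float:
    """Hypothetical thermal sensor: slow sinusoidal drift plus a
    5-second spike of +15 degrees starting at t = 125 s."""
    baseline = 20.0 + 2.0 * math.sin(2 * math.pi * t / 600.0)
    spike = 15.0 if 125.0 <= t < 130.0 else 0.0
    return baseline + spike


def sample(period_s: float, duration_s: float = 600.0) -> list[tuple[float, float]]:
    """Take one (timestamp, value) sample every `period_s` seconds."""
    n = int(duration_s / period_s)
    return [(i * period_s, sensor_reading(i * period_s)) for i in range(n)]


def peak(samples: list[tuple[float, float]]) -> float:
    """Highest value seen in a set of samples."""
    return max(value for _, value in samples)


high_res = sample(period_s=1.0)   # 1 Hz: 600 samples
low_res = sample(period_s=60.0)   # once per minute: 10 samples

print(f"1 Hz peak:   {peak(high_res):.1f}")
print(f"1/min peak:  {peak(low_res):.1f}")
```

At one sample per minute, the samples land at 120 s and 180 s and skip the spike entirely, so the low-resolution view reports nothing unusual while the 1 Hz view catches the event.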

When we talk about physical AI, we’re not talking about large language models. LLMs operate on a probabilistic basis, but in the physical world, you need deterministic answers. You need precision that results in a physical action: systems that detect, analyze and act based on an accurate and detailed picture of what is happening in the real world. Imagine a satellite processing telemetry, detecting a collision risk and firing thrusters to adjust its orbit.
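
The detect, analyze and act loop in that example can be sketched as a deterministic rule. The Python below is a deliberately simplified illustration, not an operational conjunction-assessment method: it assumes straight-line relative motion between the satellite and a tracked object, and the 1 km miss-distance threshold is a hypothetical value. Given the same telemetry, it always returns the same decision.

```python
import math
from dataclasses import dataclass

Vector = tuple[float, float, float]

# Hypothetical safety threshold; real operators set their own criteria.
MISS_THRESHOLD_M = 1_000.0


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


@dataclass
class Conjunction:
    time_to_closest_s: float
    miss_distance_m: float


def closest_approach(rel_pos_m: Vector, rel_vel_mps: Vector) -> Conjunction:
    """Closest approach assuming straight-line relative motion.
    Minimizes |r + v*t| over t >= 0, giving t* = -(r . v) / (v . v)."""
    vv = dot(rel_vel_mps, rel_vel_mps)
    if vv == 0.0:
        # No relative motion: the current separation is the miss distance.
        return Conjunction(0.0, math.sqrt(dot(rel_pos_m, rel_pos_m)))
    # Clamp at zero: an object already moving away is closest right now.
    t_star = max(0.0, -dot(rel_pos_m, rel_vel_mps) / vv)
    miss = tuple(r + v * t_star for r, v in zip(rel_pos_m, rel_vel_mps))
    return Conjunction(t_star, math.sqrt(dot(miss, miss)))


def should_maneuver(c: Conjunction, threshold_m: float = MISS_THRESHOLD_M) -> bool:
    """Deterministic decision: fire thrusters if the predicted miss is too close."""
    return c.miss_distance_m < threshold_m


# Object 10 km ahead and 500 m off-axis, closing at 10 m/s.
c = closest_approach((10_000.0, 500.0, 0.0), (-10.0, 0.0, 0.0))
print(c, should_maneuver(c))  # closest in 1000 s at 500 m, so True
```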

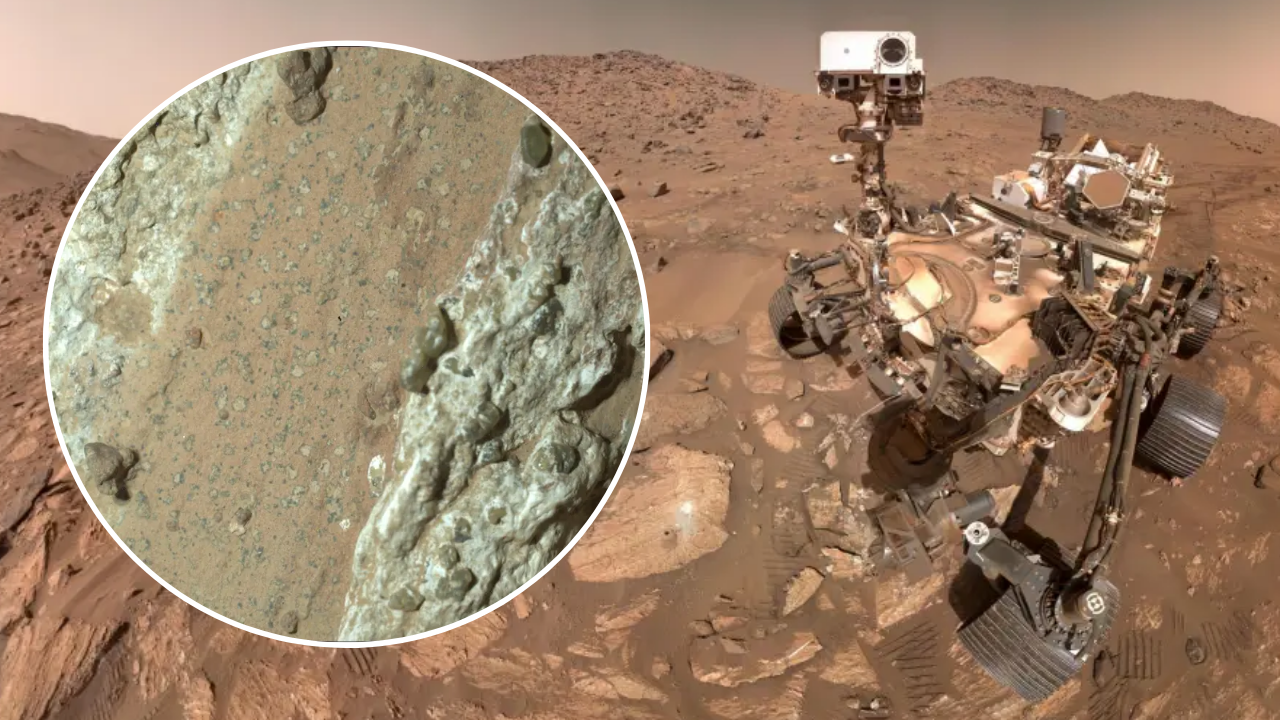

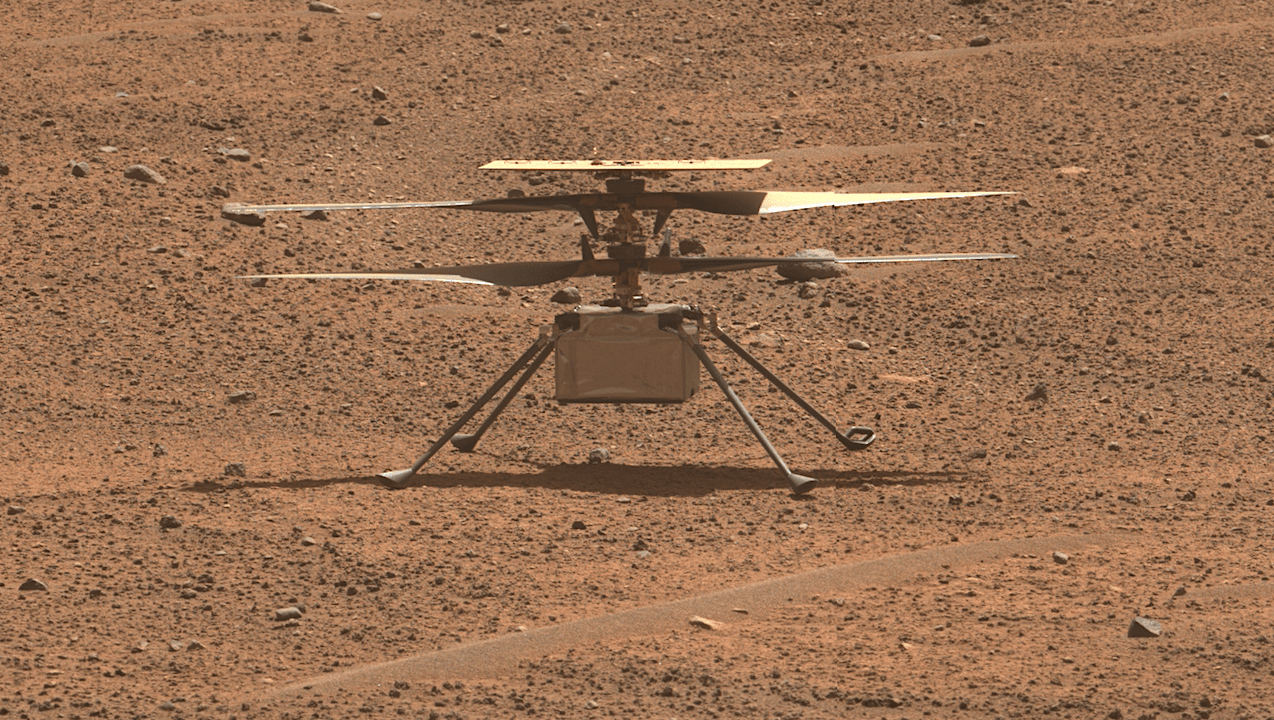

Earth observation, deep-space navigation and even unlocking discoveries on Mars all depend on physical AI that’s making decisions based on precise time series data.

The cardinality wall

Here's where things get complicated. Aerospace environments generate what we call high-cardinality data, or datasets with a huge number of unique identifiers or tags. Tracking the altitude of each aircraft once per minute would be low-cardinality. It's easy to store and manage because it involves relatively few unique series. But the moment you look at a single engine producing thousands of data streams, multiplied by engines, multiplied by aircraft, multiplied by flights, multiplied by conditions, you're firmly in high-cardinality territory.
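
The multiplication in that paragraph is the whole problem, and a few lines of Python show how fast it grows. This sketch treats each tag (sensor, engine, aircraft, flight) as an independent dimension, so the product of distinct values per tag gives a worst-case series count. The fleet sizes are hypothetical round numbers, not figures from any operator.

```python
from math import prod


def worst_case_cardinality(distinct_values_per_tag: dict[str, int]) -> int:
    """Upper bound on unique series: every combination of tag values is a
    separate series, so the per-tag counts multiply."""
    return prod(distinct_values_per_tag.values())


def observed_cardinality(points: list[dict[str, str]], tag_keys: list[str]) -> int:
    """Unique tag combinations actually present in a batch of points."""
    return len({tuple(p[k] for k in tag_keys) for p in points})


# Hypothetical fleet: one altitude series per aircraft (low cardinality).
low = {"aircraft": 100}
# Hypothetical engine telemetry: 2,000 streams per engine, 4 engines,
# 100 aircraft and 50 flights each, with every dimension stored as a tag.
high = {"sensor": 2_000, "engine": 4, "aircraft": 100, "flight": 50}

print(worst_case_cardinality(low))   # 100
print(worst_case_cardinality(high))  # 40000000
```

Adding one more tag, such as an operating condition with ten possible values, would multiply that 40 million by ten again.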

Traditional databases weren’t designed for this combinatorial explosion. And yet, this is exactly the kind of data physical AI depends on to operate safely, reliably and autonomously.

Only purpose-built time series infrastructure optimized for high ingest and high-cardinality management can deliver the precision required to give a high-resolution picture of what is happening in the real world. Couple this with real-time querying, and sensor data becomes the input that drives an intelligent, real-world monitoring and control system for these high-value missions.
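
High ingest plus real-time querying can be illustrated in miniature. The Python sketch below is an in-memory stand-in, not a description of how any particular time series database works internally: it keeps a sliding time window per series key and answers a "current mean" query in constant time as each point arrives. It assumes timestamps arrive in order within each series; the series key and window length are hypothetical.

```python
from collections import defaultdict, deque
from typing import Optional

SeriesKey = tuple[str, str]  # hypothetical (satellite_id, measurement) pair


class RollingWindow:
    """Per-series sliding window over the last `window_s` seconds."""

    def __init__(self, window_s: float):
        self.window_s = window_s
        self._points: dict[SeriesKey, deque] = defaultdict(deque)
        self._sums: dict[SeriesKey, float] = defaultdict(float)

    def ingest(self, key: SeriesKey, ts: float, value: float) -> None:
        """Append a reading, then evict readings older than the window.
        Assumes `ts` is non-decreasing for a given key."""
        buf = self._points[key]
        buf.append((ts, value))
        self._sums[key] += value
        while buf and buf[0][0] <= ts - self.window_s:
            _, old_value = buf.popleft()
            self._sums[key] -= old_value

    def mean(self, key: SeriesKey) -> Optional[float]:
        """Mean of readings currently in the window, or None if empty."""
        buf = self._points.get(key)
        if not buf:
            return None
        return self._sums[key] / len(buf)


window = RollingWindow(window_s=10.0)
key = ("sat-1", "battery_v")
for t in range(15):
    window.ingest(key, float(t), float(t))
print(window.mean(key))  # readings 5..14 remain, so 9.5
```

Keeping a running sum makes each query cheap regardless of window size; a production system would also have to handle late or out-of-order points, which this sketch ignores.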

Handling stratospheric volumes of data

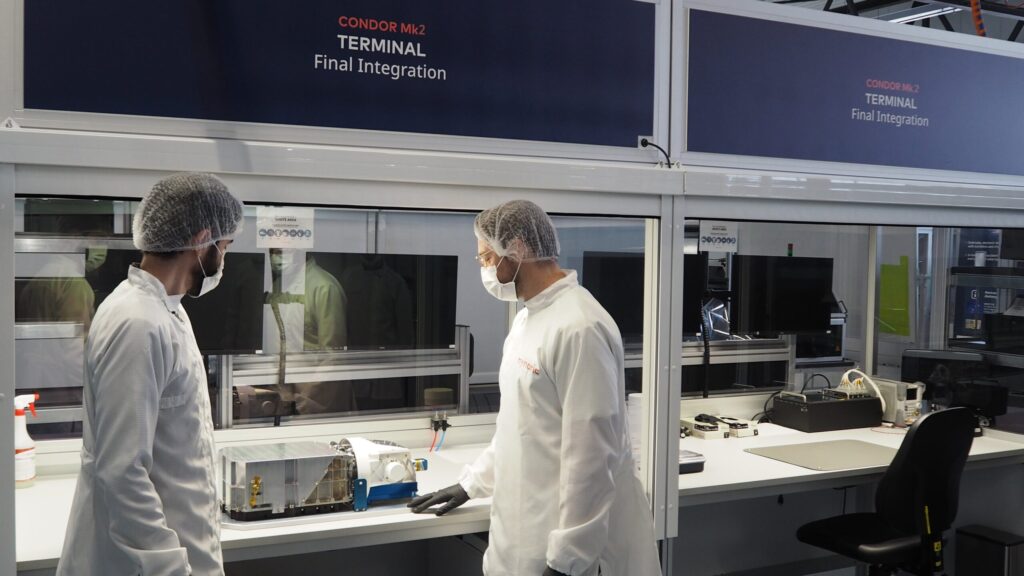

For Loft Orbital, a company building space infrastructure, the relentless ingestion of telemetry from space presented a challenge. Traditional databases couldn't keep up with the volume, variety or velocity of real-time satellite data. Latency, limited scale and architectural rigidity posed real operational risk.

Loft Orbital turned to a purpose-built time series database designed from the ground up for speed, scale and real-time performance. With it, engineers gained the ability to monitor satellite health in real time, tracking parameters like power levels, thermal conditions and diagnostics with precision.

That visibility fuels smarter decisions. By analyzing both historical and real-time data, Loft Orbital uses machine learning models to predict anomalies before they escalate into critical failures, significantly reducing the risk of costly satellite downtime and making increasingly congested orbits safer. Essentially, time series data fuels intelligence, making the overall system smart, increasingly fault-tolerant and, over time, autonomous.
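
The machine learning models mentioned above are not detailed, so the sketch below swaps in a much simpler statistical technique to show the same shape of idea: fit a baseline from historical telemetry, then score each real-time reading against it. It flags a reading whose z-score exceeds a threshold; the temperatures and the threshold of 4 are hypothetical.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    mean: float
    stdev: float


def fit_baseline(history: list[float]) -> Baseline:
    """Summarize historical readings for one series (needs at least 2 values)."""
    return Baseline(statistics.fmean(history), statistics.stdev(history))


def z_score(value: float, baseline: Baseline) -> float:
    """Distance from the historical mean in standard deviations."""
    if baseline.stdev == 0.0:
        return 0.0 if value == baseline.mean else float("inf")
    return abs(value - baseline.mean) / baseline.stdev


def is_anomalous(value: float, baseline: Baseline, z_threshold: float = 4.0) -> bool:
    """Flag a real-time reading that sits far outside historical behavior."""
    return z_score(value, baseline) > z_threshold


# Hypothetical historical panel temperatures in degrees C.
history = [28.0, 28.5, 27.5, 28.0, 28.2, 27.8]
baseline = fit_baseline(history)

for reading in (28.3, 35.0):
    print(reading, is_anomalous(reading, baseline))
```

A single-reading threshold like this cannot anticipate failures the way a trained model might; it only shows where historical and real-time data meet in the pipeline.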

Time series, space and physical AI

Every major leap in space exploration (from robotic missions on Mars to the James Webb Space Telescope) relies on time series data. Humanity’s next great leap in space hinges on physical AI and the satellites and spacecraft that can process and act on high-resolution time series with increasing speed and precision. But there’s a catch: if aerospace keeps relying on general-purpose databases, we limit the intelligence we can build and the autonomy we can achieve. Missions remain constrained.

It’s time for aerospace organizations to examine the architecture behind their next mission and ask: what more could they learn if they went deeper? How much faster could mission-critical tasks be completed? Could they be automated? Where else could they go if they had every datapoint at their disposal? What else could they discover?

Precision in space starts on the ground

Every aerospace company is already collecting mountains of telemetry. The question isn’t whether you have it. It’s whether you’re putting it to work. The winners in the next era of space exploration will be the ones who turn that stream into real-time intelligence.

Build for scale and high fidelity: Space operations are only getting more sensor-heavy. If your data infrastructure can’t handle high-velocity, high-cardinality data today, it will become a bottleneck tomorrow. Find where you’re losing data, where precision slips, or where latency creeps in. Stress-test the workloads you know are coming and design your schemas for the tag explosion ahead. Build systems that can maintain precision as complexity grows, or prepare to pay the price when the mission is on the line.

Keep models learning: Collecting sensor data is just step one. The organizations that succeed will feed telemetry back into systems so models continuously get smarter and more precise. Begin by eliminating manual handoffs of telemetry between engineering, operations and data science. Keep models under version control and watch their performance like you’d watch your fuel gauges. Use historical telemetry to backtest rare events before you fly. That’s how you build confidence in every decision the system makes.

Tie time series to mission-critical metrics: Telemetry isn't just a stream of numbers. It's the heartbeat of every mission. Show how it maximizes uptime, prevents anomalies and speeds critical decisions. Give executives clear dashboards that link these metrics to mission outcomes. When leadership understands that connection, funding and focus naturally follow.
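
The advice to backtest rare events against historical telemetry can be sketched as a replay loop. In this minimal Python example, `backtest`, the detector and the labeled event windows are all hypothetical: recorded (timestamp, value) pairs are replayed through any detector function, and the result reports how many known events it caught and how many alarms fired outside them.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BacktestResult:
    events_total: int
    events_detected: int
    false_alarms: int


def backtest(
    telemetry: list[tuple[float, float]],
    event_windows: list[tuple[float, float]],
    detector: Callable[[float], bool],
) -> BacktestResult:
    """Replay (timestamp, value) pairs through `detector`. An event counts as
    detected if any alarm falls inside its [start, end] window; alarms
    outside every window count as false alarms."""
    detected: set[int] = set()
    false_alarms = 0
    for ts, value in telemetry:
        if not detector(value):
            continue
        hits = [i for i, (start, end) in enumerate(event_windows) if start <= ts <= end]
        if hits:
            detected.update(hits)
        else:
            false_alarms += 1
    return BacktestResult(len(event_windows), len(detected), false_alarms)


# Hypothetical recorded telemetry with two labeled rare events.
telemetry = [(0, 1.0), (1, 1.1), (2, 9.0), (3, 1.0), (4, 8.5), (5, 1.2), (6, 1.0)]
events = [(2, 3), (6, 7)]
print(backtest(telemetry, events, detector=lambda v: v > 5.0))
```

Here the simple threshold catches the first event, misses the second and raises one false alarm, which is exactly the kind of result worth knowing before flight rather than after.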

Time series has long been the quiet workhorse of aerospace. Now, with the rise of physical AI, it’s moving to the forefront. The future of space exploration depends on how well we harness it.

Evan Kaplan is the CEO of InfluxData.

SpaceNews is committed to publishing our community’s diverse perspectives. Whether you’re an academic, executive, engineer or even just a concerned citizen of the cosmos, send your arguments and viewpoints to opinion@spacenews.com to be considered for publication online or in our next magazine. The perspectives shared in these opinion articles are solely those of the authors.
