MarsRetrieval: Benchmarking Vision-Language Models for Planetary-Scale Geospatial Retrieval on Mars

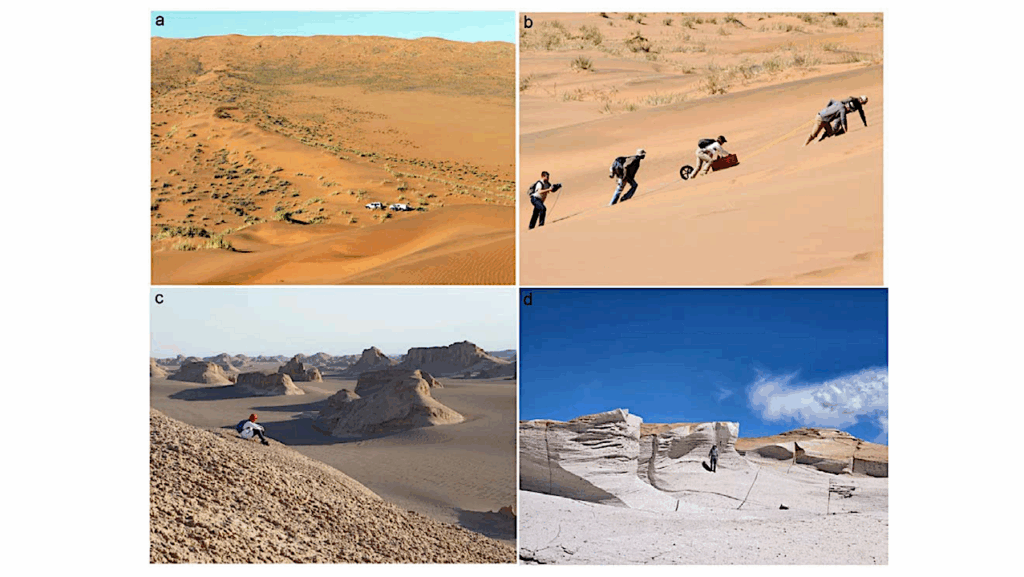

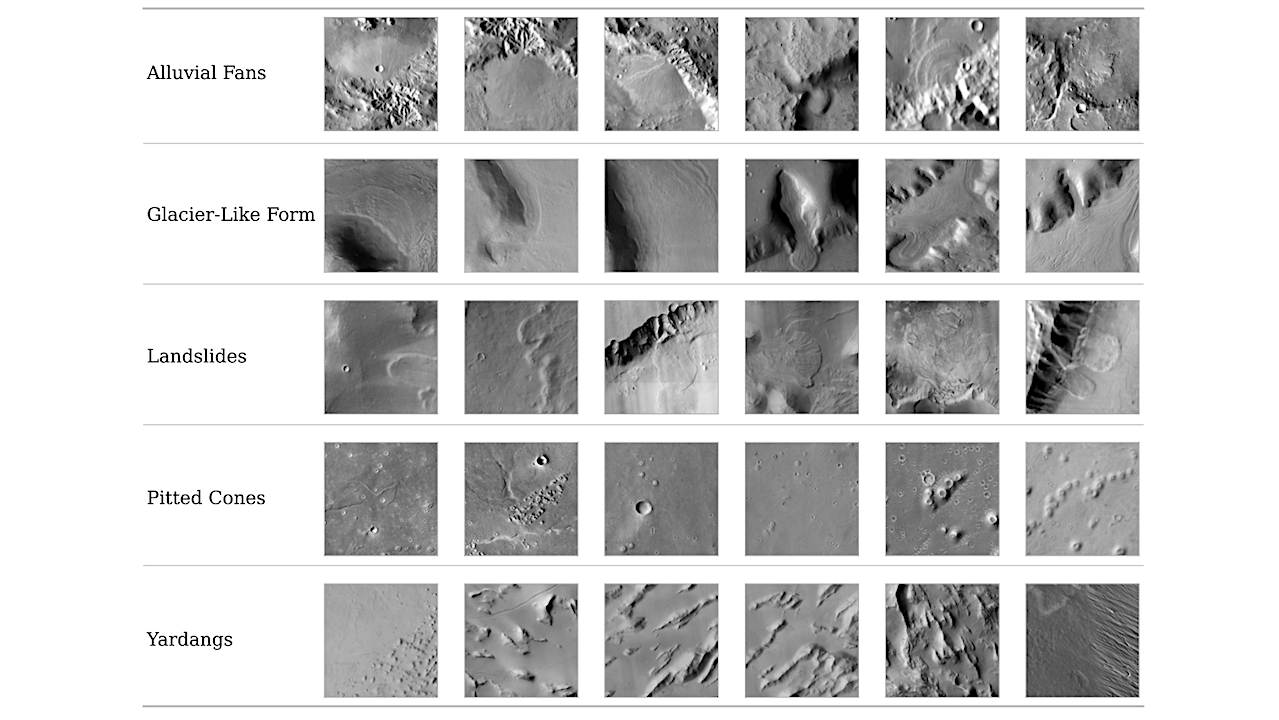

Image-based query examples for Task 3 (Global Geo-Localization).

Data-driven approaches such as deep learning are rapidly advancing planetary science, particularly Mars exploration. Despite recent progress, most existing benchmarks remain confined to closed-set supervised visual tasks and do not support text-guided retrieval for geospatial discovery.

We introduce MarsRetrieval, a retrieval benchmark for evaluating vision-language models for Martian geospatial discovery. MarsRetrieval includes three tasks: (1) paired image-text retrieval, (2) landform retrieval, and (3) global geo-localization, covering multiple spatial scales and diverse geomorphic origins.
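The abstract does not say how Task 3 is scored. One common approach in retrieval-based geo-localization is to return the location of the most similar gallery tile and check whether it falls within some distance of the true location. The sketch below illustrates that idea on a sphere with Mars' mean radius (about 3389.5 km). The gallery format, `localize`, `hit_within`, and the distance threshold are all hypothetical assumptions, not the benchmark's actual protocol.

```python
import math

# Mean (volumetric) radius of Mars in kilometres.
MARS_RADIUS_KM = 3389.5


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to 1.0 to guard against tiny floating-point overshoot before asin.
    return 2 * MARS_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def _cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u)) or 1.0
    nv = math.sqrt(sum(b * b for b in v)) or 1.0
    return dot / (nu * nv)


def localize(query_emb, gallery):
    """Return the (lat, lon) of the most similar gallery tile.

    `gallery` is a hypothetical list of (embedding, (lat, lon)) pairs.
    """
    best = max(gallery, key=lambda item: _cosine(query_emb, item[0]))
    return best[1]


def hit_within(query_emb, true_latlon, gallery, radius_km):
    """Hypothetical success criterion: predicted tile lies within radius_km of the truth."""
    pred = localize(query_emb, gallery)
    return great_circle_km(*pred, *true_latlon) <= radius_km
```

On Mars, one degree of latitude corresponds to roughly 59 km. A threshold in kilometres is therefore easier to compare across latitudes than a threshold in degrees.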

We propose a unified retrieval-centric protocol to benchmark multimodal embedding architectures, including contrastive dual-tower encoders and generative vision-language models. Our evaluation shows that MarsRetrieval is challenging: even strong foundation models often fail to capture domain-specific geomorphic distinctions.
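A retrieval-centric protocol lets very different architectures be compared on equal footing. Each model only has to map queries and gallery items into a shared embedding space; ranking and scoring are then identical for all of them. The sketch below shows that common core: cosine-similarity ranking followed by Recall@K. It assumes embeddings are already computed and that each query has exactly one ground-truth gallery match. The function names and this pairing assumption are illustrative, not taken from the MarsRetrieval codebase.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def rank_gallery(query: Sequence[float], gallery: Sequence[Sequence[float]]) -> List[int]:
    """Return gallery indices sorted by descending cosine similarity to the query."""
    q = l2_normalize(query)
    scores = [sum(a * b for a, b in zip(q, l2_normalize(g))) for g in gallery]
    return sorted(range(len(gallery)), key=lambda i: scores[i], reverse=True)


def recall_at_k(queries, gallery, targets, k: int) -> float:
    """Fraction of queries whose ground-truth gallery index is in the top-k results.

    targets[i] is the index in `gallery` paired with queries[i]
    (a one-to-one pairing is assumed here).
    """
    hits = sum(1 for q, t in zip(queries, targets) if t in rank_gallery(q, gallery)[:k])
    return hits / len(queries)
```

For text-to-image retrieval, `queries` would hold text embeddings and `gallery` image embeddings. Image-to-text retrieval simply swaps the two. Whether a model is a dual-tower encoder or a generative VLM only changes how those vectors are produced.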

We further show that domain-specific fine-tuning is critical for generalizable geospatial discovery in planetary settings. Our code is available at https://github.com/ml-stat-Sustech/MarsRetrieval
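The abstract does not describe the fine-tuning recipe. For contrastive dual-tower encoders, however, the standard objective is the CLIP-style symmetric InfoNCE loss over a batch of matched image-text pairs. The sketch below shows that loss in plain Python as an illustration of what domain-specific fine-tuning of such an encoder typically optimizes. It is an assumption, not the authors' confirmed method. The default temperature of 0.07 is CLIP's initial value, used here only as a placeholder.

```python
import math
from typing import List, Sequence


def _normalize(v: Sequence[float]) -> List[float]:
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def _logsumexp(xs: Sequence[float]) -> float:
    # Subtract the max for numerical stability.
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def clip_contrastive_loss(img_embs, txt_embs, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss; image i and text i are assumed to be a matched pair."""
    imgs = [_normalize(v) for v in img_embs]
    txts = [_normalize(v) for v in txt_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image_i, text_j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text cross-entropy: row i should select column i.
    loss_i2t = sum(_logsumexp(logits[i]) - logits[i][i] for i in range(n)) / n
    # Text-to-image cross-entropy: column j should select row j.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = sum(_logsumexp(cols[j]) - cols[j][j] for j in range(n)) / n
    return 0.5 * (loss_i2t + loss_t2i)
```

Minimizing this loss pulls each image toward its own caption and away from the other captions in the batch. With Martian data, this pressure could help the encoder separate landforms that general-purpose models conflate.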

Shuoyuan Wang, Yiran Wang, Hongxin Wei

Subjects: Computer Vision and Pattern Recognition (cs.CV); Instrumentation and Methods for Astrophysics (astro-ph.IM); Computation and Language (cs.CL)

Cite as: arXiv:2602.13961 [cs.CV] (or arXiv:2602.13961v1 [cs.CV] for this version)

https://doi.org/10.48550/arXiv.2602.13961

Submission history

From: Shuoyuan Wang

[v1] Sun, 15 Feb 2026 02:41:56 UTC (5,148 KB)

https://arxiv.org/abs/2602.13961
